AsianScientist (Jul. 02, 2025) – Artificial Intelligence (AI) is revolutionizing various sectors, including medicine and particularly disease diagnosis. Studies have explored how well AI models can interpret clinical data, analyze patient histories, and suggest diagnoses. Research is beginning to map out where these models excel and where they fall short.

However, a comprehensive meta-analysis comparing the diagnostic performance of generative AI models with that of physicians has been lacking. Such a comparison is essential to understand how well these models perform in real-world clinical settings.

Although individual studies have offered important insights, a systematic review was needed to bring their findings together and evaluate how these models measure up against diagnoses made by physicians.

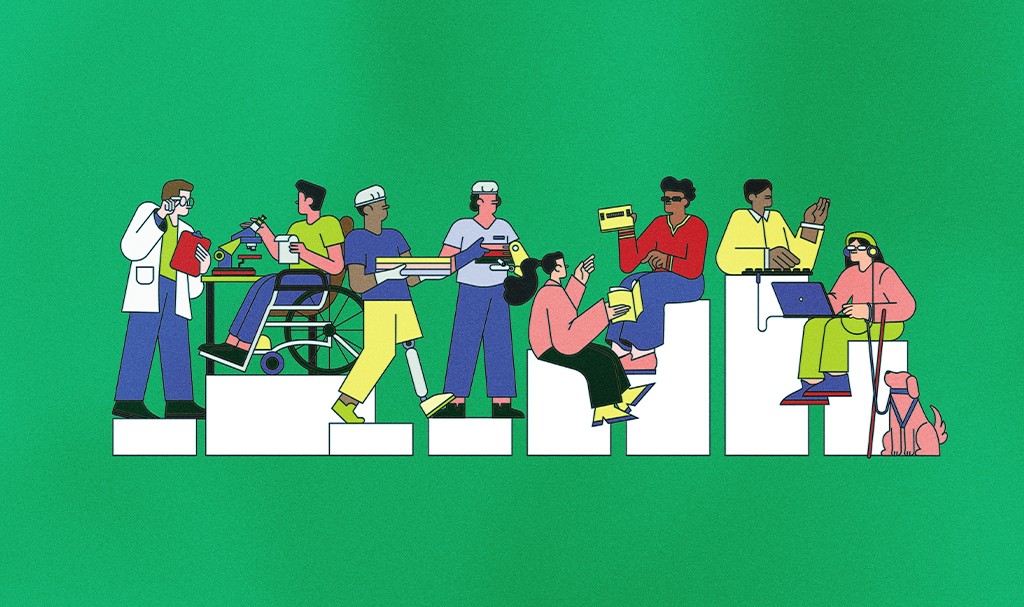

Researchers at Osaka Metropolitan University’s Graduate School of Medicine conducted a meta-analysis of generative AI’s diagnostic capabilities using 83 research papers published between June 2018 and June 2024, covering a wide range of medical specialties. Among the large language models (LLMs) analyzed, ChatGPT was the most studied.

The comparative evaluation revealed that medical specialists had a 15.8 percent higher diagnostic accuracy than generative AI. The average diagnostic accuracy of generative AI was 52.1 percent, with the latest models occasionally demonstrating accuracy comparable to that of non-specialist doctors.

The findings were published in npj Digital Medicine.

“This research shows that generative AI’s diagnostic capabilities are comparable to non-specialist doctors. It could be used in medical education to support non-specialist doctors and assist in diagnostics in areas with limited medical resources,” said Hirotaka Takita, lecturer at the Department of Diagnostic and Interventional Radiology, Osaka Metropolitan University, and an author of the study.

“Further research, such as evaluations in more complex clinical scenarios, performance evaluations using actual medical records, improving the transparency of AI decision-making, and verification in diverse patient groups, is needed to verify AI’s capabilities,” he added.

Research that contrasts the performance of generative AI with that of physicians provides valuable information for medical training.

Expert doctors are still much more accurate than AI at present, highlighting the importance of human judgment.

However, since AI performs at a level comparable to non-expert doctors, it could be a valuable tool in training medical students and residents. The paper noted that AI could help simulate real-life cases, provide feedback, and support learning through practice.

—

Source: Osaka Metropolitan University; Image: AI and Asian Scientist Magazine

The article can be found at: A systematic review and meta-analysis of diagnostic performance comparison between generative AI and physicians.

Disclaimer: This article does not necessarily reflect the views of AsianScientist or its staff.