AsianScientist (Jan. 30, 2020) – Cities are quite possibly the most complex things mankind has ever made. Each one teems with millions of people, embedded in interlocking energy, transport and building systems that are evolving by the minute. The dynamism of city life is what draws people to cities, but it is also what makes them messy, chaotic and unpredictable: a nightmare for city planners and municipal authorities trying to ensure the reliability of essential services.

Planning and managing the world’s ever-growing cities has never been more important: by 2030, it will directly affect the lives of 60 percent of the world’s population. Cities generate 80 percent of global gross domestic product, but they are also responsible for 70 percent of global greenhouse gas emissions, making the efficient running of cities an issue of planetary significance. These problems are particularly pressing for Asia, which is expected to have at least 30 megacities as soon as 2025.

While data can never tell the full story about any living organism, much less one as vast and multifaceted as an entire city, it is now possible to test increasingly realistic scenarios using simulation. This is where high performance computing (HPC), which has been used to simulate everything from aircraft wings to the birth of the entire universe, can help make cities smarter, said Mr. Charles Catlett, founding director of the Urban Center for Computation and Data (Urban CCD) at the University of Chicago and senior computer scientist at the US Department of Energy’s (DOE) Argonne National Laboratory.

“When you’re looking at something as complicated as a city, it’s not like you can come up with an optimal district design because it’s not a closed system or a fixed design like a jet nozzle or something that you might do with traditional fluid dynamics. Rather, what we are looking for is a sense of the possible and the probable,” Catlett told Supercomputing Asia.

Two models are better than one

One very real possibility that the City of Chicago is bracing itself for is extreme heat events. In 1995, a heatwave claimed the lives of more than 700 people in just five days. The heatwave was unusually severe because the unrelentingly high temperatures were amplified by urban heat islands caused by the high concentration of buildings in Chicago’s downtown. With 1995-like conditions thought to recur every 23 years, the city is already due for its next big heatwave. It also needs to factor in the impact of climate change, which might make such devastating heatwaves occur as often as three times a year by 2100.

Understanding how a heatwave might impact the city, however, is much more complicated than simply simulating the weather. While undoubtedly an important parameter and a challenge to simulate in and of itself, the weather is merely one factor contributing to the potential fallout of the next big heatwave.

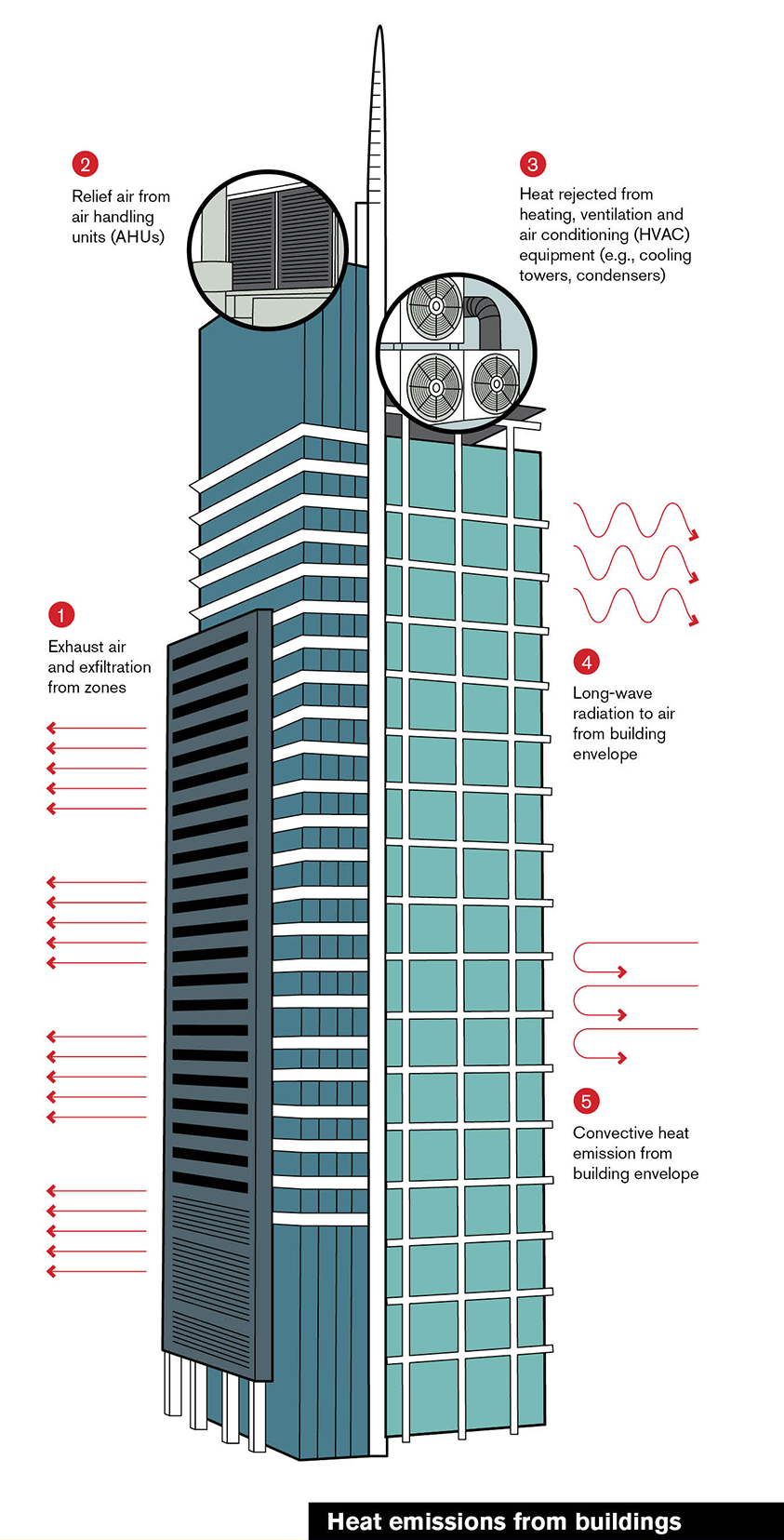

As in the case of 1995, how the buildings trap and radiate heat and the presence or absence of green spaces are crucial determinants of local temperatures; these in turn are influenced over time by socioeconomic factors such as building demand and zoning policies. Furthermore, the common response to heatwaves is to increase the use of air conditioning, which paradoxically drives up the amount of heat generated by buildings and places a strain on the energy supply.

“If I were the City of Chicago, I would want to know which of the buildings in my city are not going to be able to keep up with the demand for cooling during a heatwave. I’m particularly interested not so much in office buildings, but perhaps hospitals, retirement homes or public housing, where those most likely to suffer from a heatwave, like the elderly, live,” Catlett explained. “Then I want to ask which of the buildings are able to keep up with the demand for cooling, but do so with inordinately high energy consumption.”

“With that information, you can take it one step further and identify the buildings that are likely to have the most difficulty, then do an HPC ensemble that allows you to evaluate multiple energy retrofits for these buildings, and work with the owners to implement the optimal retrofits for their buildings.”

What this looks like in practice involves coupling several computational models together. To be able to address the questions that Catlett and his team are asking, a weather simulation forecasting the temperatures during a hypothetical heatwave would have to interact with a separate simulation modelling the energy performance and demand for each building.

“What we did as a proof of concept through the DOE Exascale Computing Project was couple DOE’s building energy model, called EnergyPlus, with an equally widely used weather model, Weather Research and Forecasting (WRF). We ran WRF with a spatial resolution of 100 m² and 50 vertical layers,” Catlett said.

“EnergyPlus typically runs a single building on a PC, taking anywhere from several hours to several days to compute an entire year of hourly weather. Instead, we put one building on each computational core, using 20,000 cores for as many buildings, and used WRF hourly output to compute energy performance and demand for each building during a multi-day heat event. We then created an ensemble workflow to evaluate multiple possible retrofit strategies for a subset of these buildings, looking to optimize the retrofit investment relative to cost-benefit.”
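The shape of that workflow can be illustrated with a toy sketch. The code below is not EnergyPlus or WRF, and it is not the team's implementation: the `Building` fields, the linear cooling-load formula, the setpoint and the high-use threshold are all hypothetical stand-ins chosen for illustration. A thread pool stands in for the one-building-per-core layout, since each building run depends only on the shared hourly weather series.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import NamedTuple


class Building(NamedTuple):
    """Hypothetical building description; a real energy model needs far more detail."""
    name: str
    heat_gain_kw_per_deg: float  # cooling load added per degree C above the setpoint
    cooling_capacity_kw: float   # largest load the cooling system can remove
    floor_area_m2: float


SETPOINT_C = 24.0  # assumed indoor target temperature


def simulate_building(building, hourly_temps_c):
    """Toy stand-in for one building-energy run driven by hourly weather output."""
    unmet_hours = 0
    cooling_kwh = 0.0
    for temp in hourly_temps_c:
        load_kw = max(0.0, temp - SETPOINT_C) * building.heat_gain_kw_per_deg
        if load_kw > building.cooling_capacity_kw:
            unmet_hours += 1
        # In any one hour the system delivers at most its capacity.
        cooling_kwh += min(load_kw, building.cooling_capacity_kw)
    return {
        "name": building.name,
        "unmet_hours": unmet_hours,
        "kwh_per_m2": cooling_kwh / building.floor_area_m2,
    }


def classify(result, high_use_kwh_per_m2):
    """Sort a result into the two groups of interest, or 'ok'."""
    if result["unmet_hours"] > 0:
        return "cannot keep up"
    if result["kwh_per_m2"] > high_use_kwh_per_m2:
        return "keeps up at high energy cost"
    return "ok"


def run_heat_event(buildings, hourly_temps_c, high_use_kwh_per_m2=1.0):
    """Run every building independently against the same weather series."""
    with ThreadPoolExecutor() as pool:
        results = list(
            pool.map(lambda b: simulate_building(b, hourly_temps_c), buildings)
        )
    return {r["name"]: classify(r, high_use_kwh_per_m2) for r in results}
```

Because no building run depends on another, the same pattern scales from a handful of threads to thousands of cores; only the mechanism that hands out the work changes.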

For example, this approach might reveal that a retrofit costing $10 million would lead to a 20 percent improvement in building performance through the heatwave, while a $12 million retrofit would improve performance by 35 percent.
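The trade-off in this example can be made explicit with a little arithmetic. The sketch below uses only the two illustrative figures from the paragraph above; the `Retrofit` type and the "percentage points per million dollars" metric are assumptions made for illustration, not the team's actual optimization criterion.

```python
from typing import NamedTuple


class Retrofit(NamedTuple):
    label: str
    cost_musd: float        # cost in millions of US dollars
    improvement_pct: float  # improvement in building performance, in percent


def points_per_million(option):
    """Average improvement bought by each million dollars spent."""
    return option.improvement_pct / option.cost_musd


def marginal_points_per_million(cheaper, pricier):
    """Extra improvement per extra million when upgrading from cheaper to pricier."""
    extra_improvement = pricier.improvement_pct - cheaper.improvement_pct
    extra_cost = pricier.cost_musd - cheaper.cost_musd
    return extra_improvement / extra_cost


basic = Retrofit("basic", 10.0, 20.0)
deeper = Retrofit("deeper", 12.0, 35.0)

print(points_per_million(basic))                   # 2.0
print(round(points_per_million(deeper), 2))        # 2.92
print(marginal_points_per_million(basic, deeper))  # 7.5
```

On these numbers, the extra $2 million buys 15 percentage points, or 7.5 points per million, against 2 points per million for the first $10 million. An ensemble repeats this kind of comparison across many candidate retrofits and many buildings.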

“By running these ensembles with HPC, you can ask hundreds or thousands of different questions,” Catlett said. “That way, you could at least guide the building owner in making those investments.”

Taking the city’s pulse

As useful as they might be, simulations still ultimately need to be verified with real-world data. But what kind of data would help you understand the health of a city? And importantly, how can that data be captured, analyzed, moved and stored in a safe and cost-effective way?

“What measurements would assist the scientific community working with cities on diagnosing things like the impact of air quality on school performance, noise on the health of elderly residents, or the flow of pedestrians and safety issues in the downtown area? These are some of the questions that have driven the measurement strategies of the Array of Things,” Catlett said.

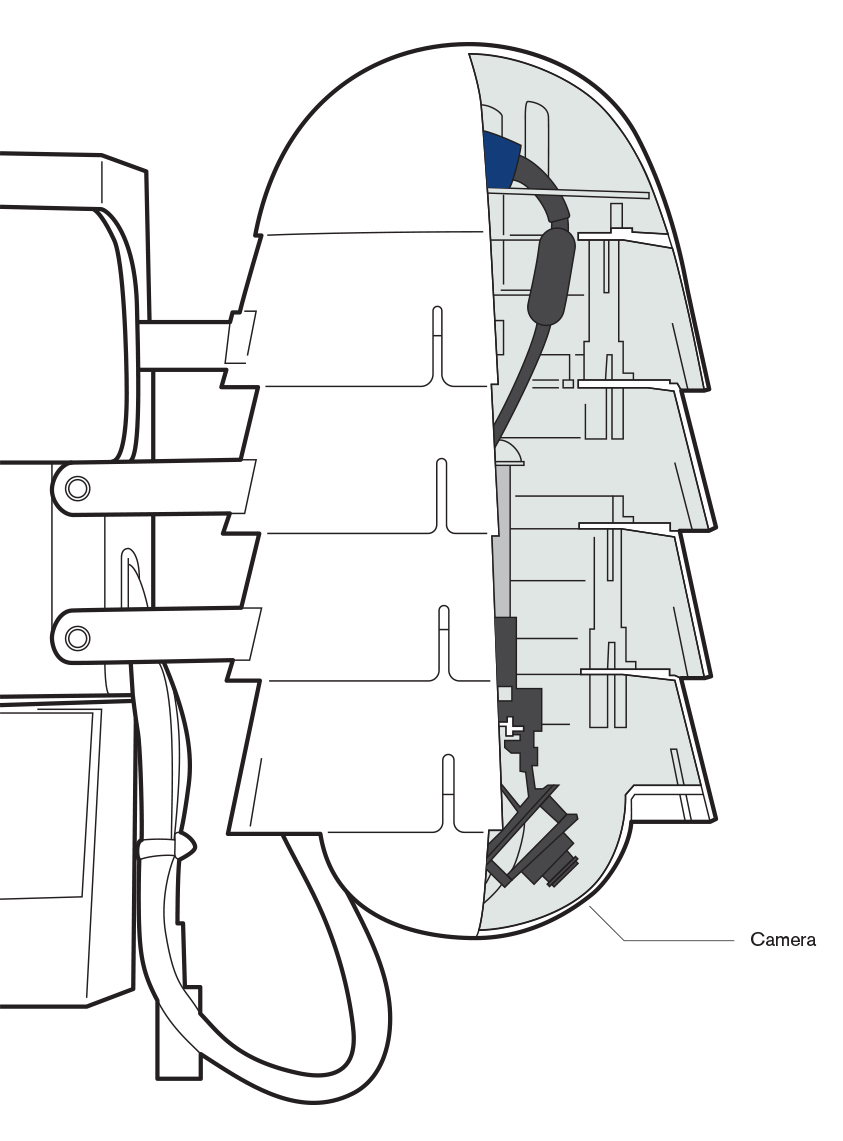

A play on words combining the Internet of Things and array telescopes, the Array of Things is an Urban CCD project that seeks to use a network of sensors as a city-wide health tracker, analogous to a Fitbit for cities. Designed to be modular to accommodate future sensors, each node can measure environmental factors like temperature, pressure, humidity, light and even ambient sound intensity; common air pollutants like particulate matter, ozone and carbon monoxide; and pedestrian and vehicular traffic. Machine learning is then applied to analyze images locally within each ‘node.’

In 2020, with planned upgrades to the machine learning hardware in the nodes, more sophisticated machine learning could enable the sensors to detect flooding, the detailed flow of traffic at an intersection or of people through a park, and other factors of interest to city planners.

All data captured by the sensors is open to the public and available for free on a web-based portal and through a near-real-time API, allowing everyone from scientists to the residents themselves to explore the data or even develop apps based on it. This open-source approach extends beyond the data itself to the underlying software and hardware platform, called Waggle, developed by Argonne National Laboratory, with all software published as open source to encourage participation and transparency.

“We’ve done a major test in Chicago with about 120 nodes, some of which have been up for two years. We’ve also recently received a new grant from the US National Science Foundation to take our Waggle platform and apply it not just to cities, but to ecological and environmental projects as well,” Catlett said.